A Comprehensive Employee Data Modernization Led by Optimization Prime

Kardium Inc. engaged Optimization Prime to design and implement a modern, API‑driven integration between Dayforce HCM and Microsoft Dynamics 365 Finance & Operations. The initiative delivered an automated data pipeline, improved data quality, and a unified employee information ecosystem that supports both HR and operational workflows.

The engagement focused on resolving structural inconsistencies in the Dayforce data, standardizing critical master data, and ensuring that only clean, validated records were ingested into Dynamics 365.

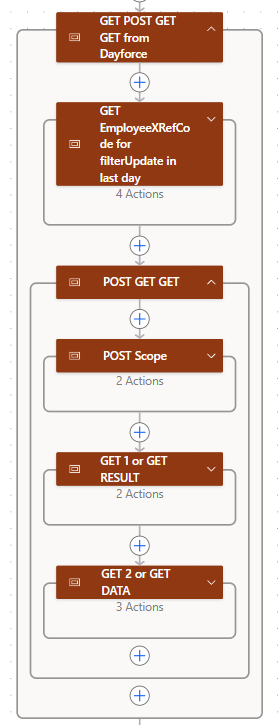

1. Dayforce Data Extraction

Retrieved JSON data from the Dayforce API using a combination of GET and POST requests, covering employee master records, employment history, positions, and organizational structures.

Identified and resolved inconsistencies in Dayforce’s data model, including nested and array‑based fields that required normalization for Dynamics 365 compatibility.

Applied completeness checks and business‑rule validations to ensure all required attributes, relationships, and reference data were present before transformation and load.
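As a rough illustration of a completeness check like the one described above, the Python sketch below splits extracted records into complete and incomplete sets before any transformation runs. The required field names (`EmployeeNumber`, `HireDate`, and so on) are hypothetical placeholders, not the actual Dayforce schema or Kardium's rule set.

```python
from dataclasses import dataclass, field

# Hypothetical required attributes; the real list depends on the Dayforce
# payloads retrieved and the Dynamics 365 entities being populated.
REQUIRED_FIELDS = ("EmployeeNumber", "FirstName", "LastName", "HireDate", "DepartmentCode")


@dataclass
class CompletenessResult:
    complete: list = field(default_factory=list)
    # Each entry is (record, list_of_missing_field_names).
    incomplete: list = field(default_factory=list)


def check_completeness(records, required=REQUIRED_FIELDS):
    """Split records into complete ones and ones missing required attributes."""
    result = CompletenessResult()
    for rec in records:
        # Treat absent keys, None, empty strings, and empty lists as missing.
        missing = [name for name in required if rec.get(name) in (None, "", [])]
        if missing:
            result.incomplete.append((rec, missing))
        else:
            result.complete.append(rec)
    return result
```

Incomplete records are kept together with the names of their missing fields, so they can be reported back for correction in the source system rather than silently dropped.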

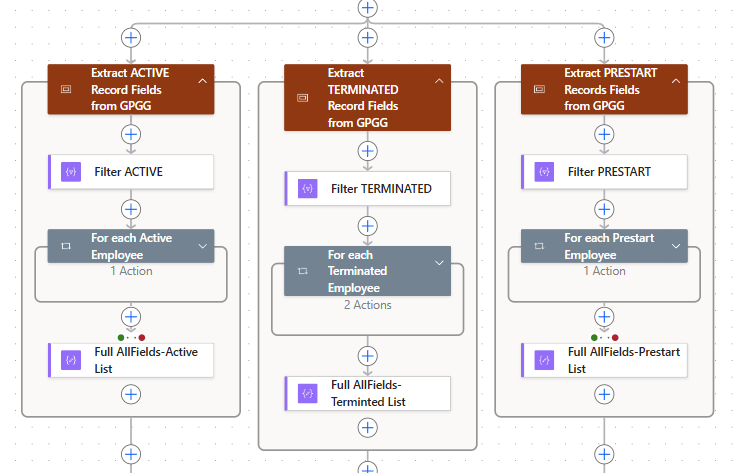

2. Transformation Layer

Standardized and normalized Dayforce JSON structures (including nested objects, single‑value arrays, and effective‑dated fields) to align with Dynamics 365 entity requirements.
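A normalization step of this kind can be sketched in Python as follows, assuming records arrive as parsed JSON dictionaries. Nested objects are flattened into dotted keys, single-value arrays are unwrapped, and for effective-dated history the entry in force on a given date is selected. The `EffectiveStart`/`EffectiveEnd` field names and ISO `YYYY-MM-DD` date strings are assumptions for illustration, not a description of the exact Dayforce payloads.

```python
from datetime import date


def unwrap_single(value):
    """Replace a one-element list with its only element; leave other values as-is."""
    if isinstance(value, list) and len(value) == 1:
        return value[0]
    return value


def flatten(obj, prefix=""):
    """Flatten nested dicts into dotted keys, unwrapping single-value arrays first."""
    flat = {}
    for key, value in obj.items():
        name = f"{prefix}{key}"
        value = unwrap_single(value)
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{name}."))
        else:
            flat[name] = value
    return flat


def current_record(history, as_of):
    """Return the effective-dated entry in force on `as_of`, or None.

    Assumes hypothetical EffectiveStart / EffectiveEnd keys holding ISO dates,
    with EffectiveEnd set to None for an open-ended entry.
    """
    in_force = [
        h for h in history
        if date.fromisoformat(h["EffectiveStart"]) <= as_of
        and (h.get("EffectiveEnd") is None or as_of <= date.fromisoformat(h["EffectiveEnd"]))
    ]
    # If entries overlap, prefer the one that started most recently.
    return max(in_force, key=lambda h: date.fromisoformat(h["EffectiveStart"]), default=None)
```

Arrays with more than one element are deliberately left as lists, since they usually represent genuine one-to-many data (for example, employment history) that belongs in its own target entity rather than in a flattened column.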

Mapped Dayforce fields to D365 personnel, position, and organizational entities, resolving lookups, reference codes, and hierarchical relationships to ensure structural consistency.
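A minimal sketch of field mapping with lookup resolution, assuming records have already been flattened into dotted keys. `FIELD_MAP`, `DEPARTMENT_LOOKUP`, and the target field names are hypothetical; a real mapping would follow the Dynamics 365 data entities selected for the project.

```python
# Hypothetical mapping from flattened Dayforce keys to target field names.
FIELD_MAP = {
    "XRefCode": "PersonnelNumber",
    "FirstName": "FirstName",
    "LastName": "LastName",
    "Department.XRefCode": "DepartmentNumber",
}

# Hypothetical lookup: Dayforce department codes -> D365 operating unit numbers.
DEPARTMENT_LOOKUP = {"ENG": "OU-0001", "FIN": "OU-0002"}


class MappingError(ValueError):
    """Raised when a reference code has no counterpart in the target system."""


def map_record(flat, field_map=FIELD_MAP, dept_lookup=DEPARTMENT_LOOKUP):
    """Rename fields for the target entity and translate reference codes."""
    target = {dst: flat.get(src) for src, dst in field_map.items()}
    code = target.get("DepartmentNumber")
    if code not in dept_lookup:
        raise MappingError(
            f"Unknown department code {code!r} for worker {target.get('PersonnelNumber')!r}"
        )
    target["DepartmentNumber"] = dept_lookup[code]
    return target
```

Raising on an unknown code, rather than passing it through unchanged, keeps an unresolved reference from ever reaching the load step.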

Applied business‑rule logic and data‑quality checks to prepare clean, validated datasets for ingestion, including validation of job titles, departments, worker categories, and reporting relationships.
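One reporting-relationship check that lends itself to a short example is detecting managers that do not exist in the dataset and reporting chains that loop back on themselves (a worker who ends up reporting to themselves through the hierarchy). The Python sketch below assumes a simple worker-to-manager dictionary; it illustrates the idea and is not the validation logic used in the project.

```python
def find_reporting_issues(manager_of):
    """Return (unknown_managers, workers_in_cycles) for a worker -> manager map.

    `manager_of` maps each worker ID to its manager ID, or None for the
    top of the hierarchy.
    """
    # Workers whose manager ID does not appear as a worker in the dataset.
    unknown = sorted(w for w, m in manager_of.items() if m is not None and m not in manager_of)

    in_cycle = set()
    for start in manager_of:
        seen = []
        node = start
        # Walk up the chain until the top, an unknown manager, or a repeat.
        while node is not None and node in manager_of and node not in seen:
            seen.append(node)
            node = manager_of[node]
        if node is not None and node in seen:
            # The chain revisited a worker: everything from that point loops.
            in_cycle.update(seen[seen.index(node):])
    return unknown, sorted(in_cycle)
```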

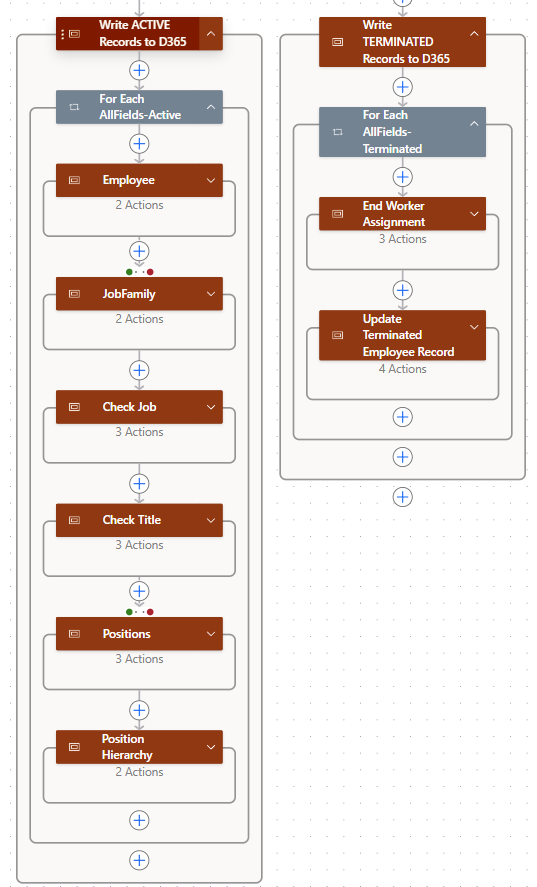

3. Dynamics 365 Data Loading

Loaded worker, employment, position, and organizational hierarchy data into Dynamics 365 using structured templates and controlled data‑import processes.

Ensured all lookup values, foreign keys, and reference entities were resolved prior to load, preventing broken relationships and maintaining model integrity.
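Resolving references before load typically involves two things: loading referenced entities first, and rejecting rows whose foreign keys point at nothing. The Python sketch below uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) to derive a load order from a hypothetical entity dependency graph, plus a simple foreign-key check. The entity names and key fields are illustrative assumptions, not the actual Dynamics 365 entity list used for Kardium.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency graph: each entity maps to the entities it
# references, which must therefore be loaded before it.
ENTITY_DEPENDENCIES = {
    "Departments": set(),
    "Jobs": set(),
    "Positions": {"Departments", "Jobs"},
    "Workers": set(),
    "PositionAssignments": {"Positions", "Workers"},
}


def load_order(dependencies=ENTITY_DEPENDENCIES):
    """Return entity names ordered so every referenced entity comes first."""
    return list(TopologicalSorter(dependencies).static_order())


def unresolved_references(rows, fk_field, known_keys):
    """Return rows whose foreign-key value is missing from the referenced entity."""
    return [row for row in rows if row.get(fk_field) not in known_keys]
```

`TopologicalSorter` also raises `graphlib.CycleError` if the dependency graph itself contains a cycle, which surfaces a modeling mistake before any data is imported.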

Applied error‑handling and validation checks during import to identify missing references, inconsistent values, and structural anomalies, providing clear remediation paths for downstream corrections.
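Import-time error handling of this kind can be pictured as running a set of checks over each row and collecting structured issues, each with a suggested remediation, instead of failing the whole batch. The check functions, field names, and allowed `WorkerCategory` values below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ImportIssue:
    record_id: str
    field: str
    problem: str
    remediation: str


# Hypothetical set of worker categories accepted by the target system.
ALLOWED_CATEGORIES = {"Employee", "Contractor"}


def check_hire_date(row):
    """Flag rows with no hire date."""
    if not row.get("HireDate"):
        yield ImportIssue(row.get("PersonnelNumber", "?"), "HireDate", "missing",
                          "Populate the hire date in Dayforce and re-run the extract.")


def check_worker_category(row):
    """Flag worker categories outside the allowed set."""
    value = row.get("WorkerCategory")
    if value not in ALLOWED_CATEGORIES:
        yield ImportIssue(row.get("PersonnelNumber", "?"), "WorkerCategory",
                          f"unexpected value {value!r}",
                          "Map the value to an allowed category or extend the lookup table.")


def validate_for_import(rows, checks):
    """Run every check on every row; return (loadable_rows, issues)."""
    loadable, issues = [], []
    for row in rows:
        row_issues = [issue for check in checks for issue in check(row)]
        if row_issues:
            issues.extend(row_issues)
        else:
            loadable.append(row)
    return loadable, issues
```

New rules can be added by writing another generator function and passing it in `checks`, so the rule set can grow as testing surfaces new anomalies.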

4. Development, Testing, and Deployment

Designed and implemented the integration workflow in a dedicated UAT environment, allowing iterative development, data validation, and stakeholder review without impacting live operations.

Adjusted and refined the workflow based on testing feedback, including updates to transformation logic, relationship handling, and data‑quality checks.

Promoted the finalized workflow to production through a controlled deployment process, ensuring reliability, traceability, and alignment with Kardium’s operational requirements.